Resolution

Introduction

The spatial resolution of telescopes obtaining images from the ground is generally limited by two things: the size of the telescope and the quality of the atmosphere ("seeing") on that night. The Palomar 5-meter, for example, is a pretty darn big telescope, but the seeing is not always fabulous. The images you obtain from Palomar are often limited by the seeing, and are "smeared out" compared to what you could obtain if, say, there were no atmosphere. If there is no atmosphere, generally the most important thing in determining your spatial resolution is the size of your telescope. That, combined with the wavelength at which you are observing, gives you a diffraction limit (that is, the highest spatial resolution possible given the properties of light and the size of your telescope).

Every telescope in space can produce images limited only by the effects of diffraction -- this effect is stronger for longer wavelengths and smaller telescopes -- but diffraction will only be noticed if the camera on the telescope samples the telescope's output finely enough. Spitzer's images are diffraction-limited. Most of Spitzer's images of point sources show diffraction rings because of the telescope's small size (85 cm) and long observing wavelengths (3-160 um). In practice, what this means is that very bright sources, especially those seen with MIPS, will appear to have rings around them. This is the first Airy ring, i.e., a result of the way the telescope responds to light of this wavelength. It's not really a ring around the object. (Wikipedia entry for Airy ring, for diffraction, and for diffraction-limited system. Consult your local intro astronomy textbook for more and/or more reliable information.)
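If you want to put rough numbers on this, here is a minimal sketch using the standard Rayleigh criterion (the angular radius of the first dark Airy ring, 1.22 times wavelength over telescope diameter). That formula is textbook optics rather than something spelled out on this page, and the function name is just made up for illustration. The 85 cm diameter and the wavelengths are the Spitzer values mentioned above.

```python
import math

# Conversion factor from radians to arcseconds (about 206265).
ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi


def rayleigh_limit_arcsec(wavelength_um, diameter_m):
    """Radius of the first dark Airy ring, 1.22 * lambda / D, in arcsec.

    Assumes an ideal, unobstructed circular aperture (a simplification).
    """
    wavelength_m = wavelength_um * 1e-6
    return 1.22 * wavelength_m / diameter_m * ARCSEC_PER_RADIAN


# Spitzer's 85 cm mirror at a few of its observing wavelengths.
for wavelength_um in (3.6, 8.0, 24.0, 70.0, 160.0):
    limit = rayleigh_limit_arcsec(wavelength_um, 0.85)
    print(f"{wavelength_um:6.1f} um: {limit:5.1f} arcsec")
```

The numbers run from about 1 arcsec at 3.6 um to about 47 arcsec at 160 um. Treat these as ballpark values: the working resolution of a real instrument also depends on things like the detector's pixel size.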

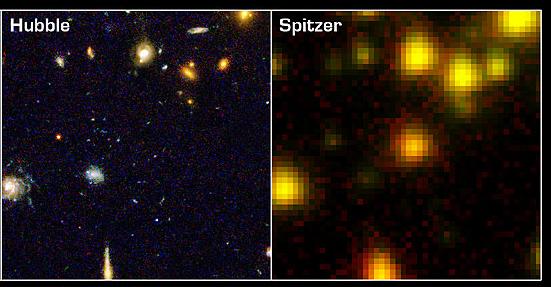

In the picture on the right (taken from this press release), you can see a (small) patch of sky imaged with Hubble (left) and Spitzer (right). The original point of this image was to show how phenomenally sensitive Spitzer is - there is a thing in the center of the Spitzer frame that is not seen by Hubble. There is something else you can learn from this image too. Look at the upper right of the Hubble image. See all the variations in size and shape of the galaxies there? Look at the same region in the Spitzer image. To Spitzer, they all look the same - similarly sized and shaped blobs. Spitzer's telescope is small (just 85 cm) and the wavelengths of light Spitzer uses are long (comparatively), so the resolution of Spitzer is limited.

Spitzer's resolution (and pixel size)

Spitzer's resolution is a strong function of wavelength. The following plot comes from the SOFIA website, and dates from 2003 or earlier, so Spitzer is still called SIRTF here.

The functional resolution is in practice a function of two things: the PSF size and the pixel size. Both change as a function of wavelength. At IRAC bands, the resolution is about 2.5 arcsec. At MIPS-24, the resolution is about 6 arcsec, at MIPS-70, it's about 20 arcsec, and at MIPS-160, it's about 40 arcsec. The pixel size also increases, from 1.2 arcsec at all four IRAC bands, to 2.55 arcsec at 24 um, to 9.96 arcsec at 70 um, to 15 arcsec (well, really 16x18 arcsec) at 160 um. These two things are not separate decisions: with a big fat PSF at 160 um, we didn't need to have 160 um pixels as small as 1.2 arcsec.

For those of you looking for connections between concepts... Note that, for IRAC, the PSFs are in general slightly undersampled (i.e., there are slightly fewer than 2 px per object). This is why PSF fitting is harder for IRAC, and why I usually use aperture photometry for IRAC. PSF fitting works great for MIPS because the PSF is very well-sampled; there are more than a few pixels per source involved in each detection.

Comparing images of multiple wavelengths

When comparing images at different wavelengths, check that the spatial resolution is comparable! This matters, particularly for mid- and far-IR observations. You will notice this if you use images of different resolutions in a 3-color composite.
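One common way to make two images comparable is to blur the sharper one down to the resolution of the fuzzier one. Here is a minimal sketch of the arithmetic, under the loud assumption that both PSFs are Gaussians (real PSFs, Airy rings and all, are not). In that approximation, widths add in quadrature, so the blurring kernel you need has FWHM equal to the square root of the difference of the squares. The function names are hypothetical; the 2.5 arcsec (IRAC) and 6 arcsec (MIPS-24) values are the approximate resolutions quoted on this page.

```python
import math

# For a Gaussian, FWHM = 2 * sqrt(2 * ln 2) * sigma (about 2.355 * sigma).
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def matching_kernel_fwhm(fwhm_sharp, fwhm_blurry):
    """FWHM of the Gaussian kernel that blurs the sharp image to the blurry one.

    Only valid in the Gaussian-PSF approximation; both inputs in the same units.
    """
    if fwhm_blurry <= fwhm_sharp:
        raise ValueError("You can only blur an image, never sharpen it.")
    return math.sqrt(fwhm_blurry**2 - fwhm_sharp**2)


# Blur an IRAC image (about 2.5 arcsec) to match MIPS-24 (about 6 arcsec).
kernel_fwhm = matching_kernel_fwhm(2.5, 6.0)
kernel_sigma = kernel_fwhm * FWHM_TO_SIGMA
print(f"kernel FWHM  = {kernel_fwhm:.2f} arcsec")
print(f"kernel sigma = {kernel_sigma:.2f} arcsec")
```

Note that this only ever goes one direction. You can degrade the sharp image to match the blurry one, but not the other way around.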

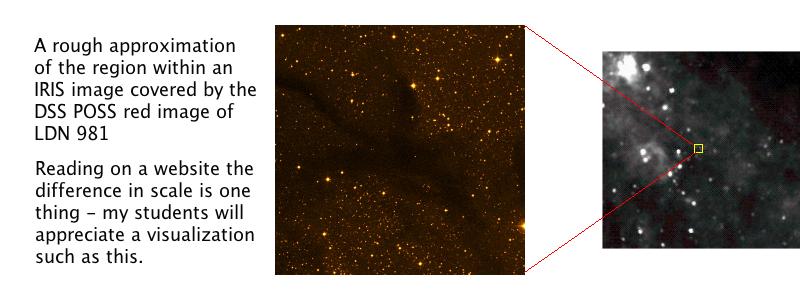

Related to this issue of resolution is the size of images that you can download using any of a number of online resources. For surveys with low spatial resolution, often the default size of the image you can download is MUCH MUCH larger than the default size of an image you can download from a survey with high spatial resolution.

Why does this matter to you? (general case)

When you are comparing the same region of space over multiple wavelengths, even within Spitzer, you need to be aware of this issue. The resolution element ("beam size") of Spitzer is so large that it may in fact enclose more than one source. Because the resolution changes even within Spitzer's channels, you can find many examples of cases in which a 160 micron or 70 micron or even 24 micron source encompasses more than one IRAC source. If you look at the same region of space with something with much less spatial resolution (try IRAS or COBE/DIRBE archives), you can see that Spitzer was a huge improvement over those prior missions. If you look at the same region of space with something that has much higher spatial resolution (like HST), you will probably see some things (especially those in complicated regions) break into more pieces. This kind of comparison is of course greatly complicated when you are also comparing across wavelengths, because even the same object at the same resolution can look very different at different wavelengths. Caution is warranted!
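To see how two sources can merge into one inside a big beam, here is a toy one-dimensional sketch. It assumes two equally bright point sources and a Gaussian beam (a simplification of the real PSF), adds up the two blurred profiles, and counts how many peaks survive. The 3 arcsec separation and all the names are made up for illustration; the 2.5 and 6 arcsec beam widths are the approximate IRAC and MIPS-24 resolutions quoted on this page.

```python
import math

# For a Gaussian, sigma = FWHM / (2 * sqrt(2 * ln 2)).
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def blended_profile(separation, fwhm, step=0.05, half_width=30.0):
    """Brightness along a line through two equal point sources, in arcsec units.

    Each source is blurred by a Gaussian beam of the given FWHM (an assumption).
    """
    sigma = fwhm * FWHM_TO_SIGMA
    centers = (-separation / 2.0, separation / 2.0)
    n_points = int(round(2.0 * half_width / step)) + 1
    positions = [-half_width + i * step for i in range(n_points)]
    return [
        sum(math.exp(-0.5 * ((x - c) / sigma) ** 2) for c in centers)
        for x in positions
    ]


def count_peaks(profile):
    """Number of strict local maxima in the sampled profile."""
    return sum(
        1
        for i in range(1, len(profile) - 1)
        if profile[i - 1] < profile[i] > profile[i + 1]
    )


# Same two sources, 3 arcsec apart, seen with two different beams.
for fwhm in (2.5, 6.0):
    peaks = count_peaks(blended_profile(separation=3.0, fwhm=fwhm))
    print(f"beam FWHM {fwhm} arcsec: {peaks} peak(s)")
```

With the narrower beam the pair shows up as two peaks; with the wider beam they blend into a single blob, even though nothing about the sources themselves has changed.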

Why does this matter to you? (specific case of YSOs)

Example 1.

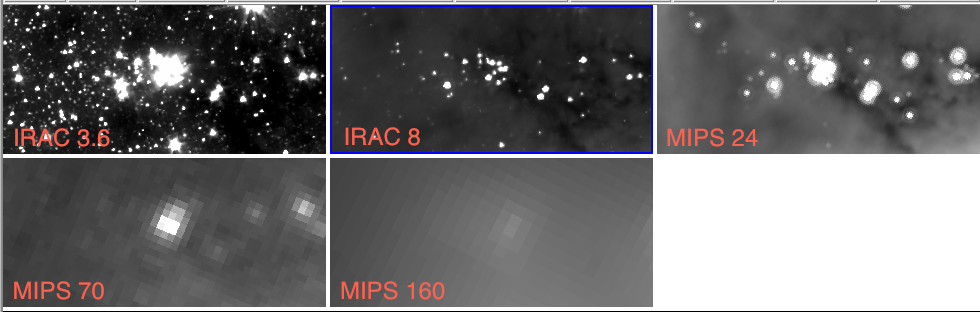

We spend a lot of time in the study of YSOs talking about the rings of dust around the young stars, and how they can become more prominent at longer wavelengths. When you look at the same object as seen with IRAC and MIPS, it will seem as if the source is getting larger at MIPS, and if the source is bright, it may even look as if it has a ring around it. See example here.

These two images are of the same tiny patch of sky, the left one using IRAC and the right using MIPS. Note that there are 'rings' around some of the sources seen in MIPS. THESE ARE NOT THE DUST RINGS AROUND THESE YOUNG STARS. I cannot emphasize this enough. There are only about 10 sources close enough to the Earth such that Spitzer can actually resolve the dusty disk. (here is one, Fomalhaut.) The sources you see in the little images here are not among those 10 close-by sources. What you are seeing here is the difference in resolution between IRAC and MIPS, and the (complicated) shape of the MIPS point-spread-function (PSF, or the way that the telescope+instrument+detectors respond to a point source of light).

The best way I can think of to explain features in the PSF that do not correspond to real, physical features is in pictures like this:

You know, from your own experience, that the lights on this football field are not really gigantic fuzzy blobs with funny purple halo/shadows lurking nearby. This is just how this camera+detector responded to this lighting situation, where these lights are very bright. Same thing in the astronomical images. There are features that are just a result of how the telescope+instrument+detectors respond to a point source of light, and these features are more prominent when the source is very bright.

Example 2.

When you are trying to match the same source across multiple bands, resolution matters. The apparently single point source that you see at 160 microns (or some other band, if you're grabbing non-Spitzer data from somewhere) may in fact combine flux from more than one source which is seen at IRAC bands. You have to be very careful in how you match up sources, assign flux matches for constructing SEDs, and/or apportion flux between two unresolved sources. For example, in theory, if there were two sources within the 160 um beam, you could put some fraction of the 160 micron flux to one source and some fraction to the other source within the beam. But what if the two sources are not the same brightness at 24 microns but they are at 8 microns? How do you decide how much of the 160 um flux to assign to one of the sources? These are complicated issues, and you need to investigate them for the sources you care about, and see if you need to worry about this for your objects.
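A first step in investigating this is simply to count how many short-wavelength sources land inside the long-wavelength beam. Here is a minimal sketch of that check. The catalog positions are entirely made up, the function names are hypothetical, and using half the beam FWHM as the matching radius is an assumption, not a rule; pick whatever radius makes sense for your data.

```python
import math


def angular_separation_arcsec(ra1, dec1, ra2, dec2):
    """Angular distance between two sky positions (degrees in, arcsec out).

    Uses the haversine formula, which behaves well for small separations.
    """
    ra1, dec1, ra2, dec2 = (math.radians(v) for v in (ra1, dec1, ra2, dec2))
    sin_half_ddec = math.sin((dec2 - dec1) / 2.0)
    sin_half_dra = math.sin((ra2 - ra1) / 2.0)
    h = sin_half_ddec**2 + math.cos(dec1) * math.cos(dec2) * sin_half_dra**2
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(h)))) * 3600.0


def sources_in_beam(center, catalog, beam_fwhm_arcsec):
    """Names of catalog sources within half a beam FWHM of the center position."""
    radius = beam_fwhm_arcsec / 2.0  # assumed matching radius
    center_ra, center_dec = center
    return [
        name
        for name, ra, dec in catalog
        if angular_separation_arcsec(center_ra, center_dec, ra, dec) <= radius
    ]


# Hypothetical long-wavelength source position and IRAC catalog (degrees).
long_wavelength_source = (83.0, -5.0)
irac_catalog = [
    ("A", 83.0003, -5.0003),
    ("B", 83.0040, -4.9970),
    ("C", 83.0200, -5.0000),
]

for beam in (6.0, 40.0):  # approximate 24 um and 160 um resolutions
    matches = sources_in_beam(long_wavelength_source, irac_catalog, beam)
    print(f"beam {beam} arcsec: {matches}")
```

If more than one name comes back, you know the long-wavelength flux may be shared, and you have to decide (and document!) how you are handling it.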

Here is an example. The tiles are 3.6, 8, 24, 70, and 160 microns. Who gets that 70 micron flux? There's a 160 um flux there too but the PSF is bigger than the field of view. For that matter, who gets some of those 24 um fluxes? Ack!

Example 3.

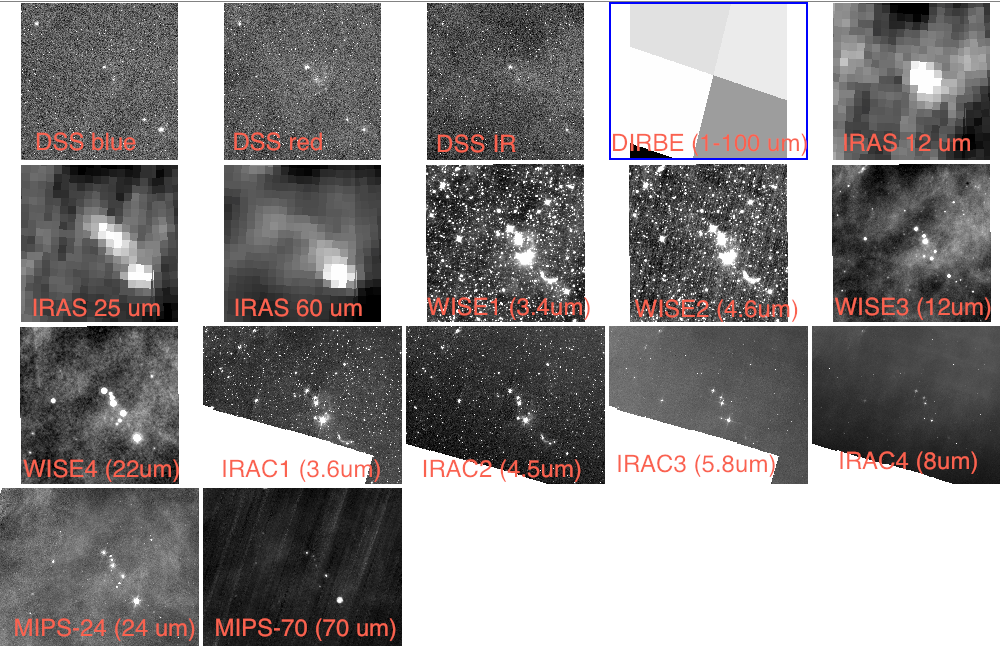

When you are trying to find archival data for the same source, resolution matters. The apparently single point source that you see in your current data may not be appropriate to match to someone's catalog from 15 years ago. Catalogs created from images whose provenance you don't know should be used with caution. You should always try to put eyeballs on the original images if you can (not always possible).

Here is the same little patch of sky at POSS (blue, red, IR), COBE/DIRBE (some sort of combination of 1-100 um scans; yes, those are pixels), IRAS (12, 25, 60), WISE (3.4, 4.6, 12, 22 um), Spitzer (3.6, 4.5, 5.8, 8, 24, 70 um). Look how much the resolution matters. This is 2 point sources in the IRAS catalog (in some bands, you see only one source -- look at the location of the source at 12 compared to 60 -- this is not the same source!). The extended emission in the lower right at WISE is a much different shape than with Spitzer at comparable wavelengths.

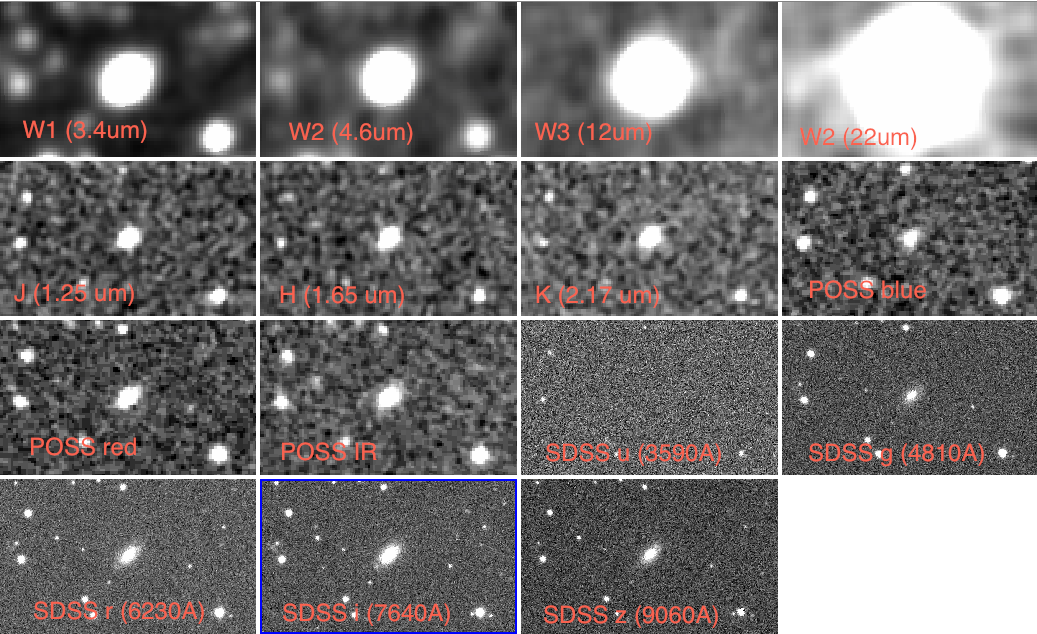

Example 4.

What if you find a cool source that has the right colors to be a young star, and it seems to be a point source at all the bands you have? What if we go and check it in other bands? Guess what I'm gonna say: resolution matters. Here is a real-life example. This object was detected at WISE bands 1, 2, 3, and 4 (3.4, 4.6, 12, and 22 microns), and I found it as having the right colors to be a YSO. The first thing to notice is how the resolution changes when you go even from 12 to 22 microns, much less 3.4 to 22 microns. This is all on the same RA/Dec scale (i.e., the size of each tile in arcseconds is the same). Just stare at those four for a while. The rest of the tiles here are the same object, on the same scale, seen at 2MASS (JHK), POSS (blue, red, IR), and Sloan (ugriz). What was a point source to WISE is very clearly a nice little elliptical galaxy once you see it in SDSS (though, given its colors, it is probably not Elliptical, in the galaxy classification sense, but most likely it's an edge-on spiral with star formation going on). My color selection is successfully finding star formation, just not star formation here in our galaxy. (If you want to investigate this guy yourself, it's ra=76.8637916667, dec=25.51377778.)

Example 5.

This one is a real exercise, in that you will need to go retrieve data, examine it, and think about it. In the CG4/Sa101 paper (Rebull et al. 2011, arXiv 1105.1180), we rejected source 073355.0-464838 as a YSO candidate because the flux density seen at 24 microns is likely contaminated by a nearby source at 8 um. Can you see this source in the images? Do you see why we dropped it? Should we have kept it?

Useful Related Links

Article on resolution from Bad Astronomy - this is in the context of debunking the moon hoax, but resolution issues are important for his discussion.

Another resolution discussion from Bad Astronomy.

Why can Hubble get detailed views of distant galaxies but not of Pluto? by Emily Lakdawalla at the Planetary Society

Questions to think about and things to try having to do with resolution

- What is the size of a typical HST image? How does it compare to a single Spitzer image, or a 'typical' Spitzer mosaic, or a single POSS plate, or the field of view of an optical telescope you have used, all compared with the size of the full moon? How does that compare to the size of a recent comet that visited the inner Solar System? or the size of a spiral arm of the Milky Way? You will have to go find on the web things like the field of view of these telescopes and these objects.

- Can you create a 3-color mosaic using just Spitzer data where the different resolutions of the various cameras are noticeable and important?

- Bonus question: how does the spatial resolution of all those telescopes listed above compare? (e.g., what is the smallest object you can resolve as more than a point source?)

- Are you going to laugh out loud the next time you're watching a crime drama, and someone says, "can you enhance that?" when referring to a blurry black-and-white image from a security camera, and someone else waves a magic wand and suddenly all sorts of small details are visible? (Can I wave my magic wand over that DIRBE image above and ever get that Spitzer/IRAC image?)

- C-WAYS Resolution Worksheet - developed in 2012 for the C-WAYS team

- C-CWEL Resolution Worksheet - developed in 2013 for the C-CWEL team

- HG-WELS Resolution Worksheet - developed in 2014 for the HG-WELS team

- IC 417 Resolution Worksheet - developed in 2015 for the IC417 team