Working with the BRCs

This page is an updated version of the Working with L1688 and Working with CG4+SA101 pages, and was developed and updated specifically for the 2011 BRC team visit. Please note: NONE of these pages are meant to be used without applying your brain! They are NOT cookbooks!

FOR REFERENCE: BRC Bigger Picture and Goals

FOR REFERENCE: File:Brcdvdreadme.txt from the DVD, in case yours is formatted so badly you can't read it. Includes instructions on how to force your computer to read any files with an extension you don't recognize (.tbl, .reg).

Contents

- 1 Downloading the data

- 2 Making the mosaics

- 3 Getting data from other wavelengths

- 4 Investigating the mosaics

- 5 Previously identified sources

- 6 Doing photometry

- 7 Bandmerging the photometry

- 8 Working with the data tables

- 9 Making color-color and color-magnitude plots

- 10 Investigating the images of the objects

- 11 Making SEDs

- 12 Literature again

- 13 Analyzing SEDs

- 14 Writing it up!

Downloading the data

9/15/11: done for both BRC 27 and BRC 34

How do I download data from Spitzer? offers tutorials in a wide variety of flavors. The second formal chapter of the professional astronomer's Data Reduction Cookbook ultimately comes from last year's NITARP project. I haven't developed a tutorial customized to your project, because this year it's easier.

Big picture goal: Get you comfortable enough to search for your own favorite target, understand what to do with the search results, and download data.

More specific shorter term goals: Search on our targets. Understand the difference between the observations. Understand why I chose to use the observations that I did.

Relevant links: How do I download data from Spitzer? and SHA

Questions for you:

- Compare the various AORs you get as your search results when you search by position. How are they the same/different? Which do we want to download?

Making the mosaics

9/15/11: done for both BRC 27 and BRC 34

In the generic case, for most targets, you can probably use the online mosaics that come as PBCD (Level 2) mosaics (or delivered products, if they exist for the region you want; see "inventory search" in the SHA). For our BRCs, we can use the online mosaics.

Big picture goal: Recognize at a glance what is an instrumental artifact and what is real.

More specific shorter term goals: Look at the online mosaics. Understand what is part of the sky and what is not. Understand which I reprocessed and why.

Relevant links: What is a mosaic and why should I care? and Resolution. Why does it matter to know what is an artifact and what is not? So you don't get fooled by stuff like this.

Questions for you:

- Compare the mosaics across the bands. What changes? What stays the same? Why?

- What is saturated? What are some other instrumental effects you can see?

- Notice the pixel scale. What is the real pixel scale of IRAC (and MIPS)? What are the pixel scales of the images? Does that actually change the resolution? (for advanced folks - why did we do this?)

Getting data from other wavelengths

9/15/11: NOT COMPLETELY done for both BRC 27 and BRC 34, but also may be skippable. The Haleakala data also count as 'from other wavelengths'.

You have already made some progress on this in your literature search this Spring, but there are a TON more data we can mine.

Big picture goal: Understand how to use the various archives to find non-Spitzer data.

More specific shorter term goals: Go get data for both BRCs for comparison to our Spitzer data, both images and catalogs. Specifically investigate the WISE archive.

Relevant links: How can I get data from other wavelengths to compare with infrared data from Spitzer? and Resolution. Also: access the WISE archive directly here, and see a step-by-step WISE archive tutorial from Berkeley here.

Questions for you:

- Figure out how to get data from Akari, WISE, 2MASS, MSX, IRAS, IPHAS, POSS, SDSS, and anyplace else you want (NB: not every survey covers both clouds, and some may cover neither, so you may not get hits everywhere). Which will give you images, and which will give you catalogs (not all will give you both)? Go do it. For images, if you are using Skyview from Goddard, pay attention to the pixel scale; it is best to go directly to the source for these archives rather than relying on Goddard. Get images covering about the same area as the Spitzer images so that they are easy to compare, but larger-scale images might be useful to give a sense of context too.

- For each catalog: What wavelength is this? How is it relevant to YSOs? How is the resolution different? (You may need to do the next section before you can answer this.)

Luisa's BRC task notes (i.e., some notes on the answers I am expecting you to get! Don't peek until you've tried; you might find different information than I did!)

Investigating the mosaics

9/15/11: basically done for both BRC 27 and BRC 34. we will revisit for specific sources.

It is "real astronomy" to spend a lot of time staring at the mosaics and understanding what you are looking at. Don't dismiss this step as not "real astronomy" just because you are not making quantitative measurements. This is time well-spent.

Big picture goal: Understand what is seen at each Spitzer band and all the other archival bands.

More specific shorter term goals: Recognize how the images differ between the two BRCs, and among the various bands.

Relevant links: How can I make a color composite image using Spitzer and/or other data? and the questions on that page.

Questions for you, among just the Spitzer images:

- How does the number of stars differ across the bands? Which band has the most stars? The fewest? (Bonus question: why?) The most nebulosity? The least? (Bonus question: why?) Are there more stars in the regions of nebulosity, or fewer? Why?

- What other features are the same across the bands?

- Do the star counts differ between the two BRCs? Why?

- Which objects are saturated, in which bands?

- How big are the features in the image (nebulosity, galaxy, space between objects), in pixels, arcseconds, parsecs, and/or light years? (What do I mean by big?) (Hint: you need to know how far away the thing is. If it helps, there are 3.26 light years in a parsec.)

- Make a three-color image. What happens when you include a MIPS-24 mosaic as one of the three colors with IRAC as the other two? Do the stars match up? Does the resolution matter? Can you tell from just a glance at the three-color mosaic which stars are bright at MIPS wavelengths?
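The "how big" question comes down to the small-angle approximation: an angular size in radians times the distance gives a physical size. A minimal Python sketch follows; the pixel scale (0.6 arcsec/pixel) and distance (1000 pc) in the usage lines are hypothetical placeholders, so read the real pixel scale from your image header and take the real distance from the literature.

```python
# Sketch: convert a feature's size from pixels to arcsec, parsecs, and light years.
# The numbers in the usage example are HYPOTHETICAL placeholders, not BRC 27/34 values.

ARCSEC_PER_RADIAN = 206265.0   # arcseconds in one radian
LY_PER_PC = 3.26               # light years in one parsec (approximate)


def pixels_to_arcsec(n_pixels, pixel_scale_arcsec):
    """Angular size in arcseconds, given a length in pixels and the image pixel scale."""
    return n_pixels * pixel_scale_arcsec


def arcsec_to_parsec(theta_arcsec, distance_pc):
    """Physical size via the small-angle approximation: size = distance * angle (radians)."""
    return distance_pc * theta_arcsec / ARCSEC_PER_RADIAN


def feature_size(n_pixels, pixel_scale_arcsec, distance_pc):
    """Size of a feature in all the units the question asks about."""
    theta = pixels_to_arcsec(n_pixels, pixel_scale_arcsec)
    size_pc = arcsec_to_parsec(theta, distance_pc)
    return {"pixels": n_pixels, "arcsec": theta, "pc": size_pc, "ly": size_pc * LY_PER_PC}


if __name__ == "__main__":
    # Hypothetical: a blob 100 pixels across, on 0.6"/pixel images, at 1000 pc.
    print(feature_size(100, 0.6, 1000.0))
```

Note that the pixel scale only enters the first step; changing how finely an image is sampled changes the number of pixels a feature spans, not its angular or physical size.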

Questions for you, among all bands you can find:

- Figure out how to get imaging data from WISE, 2MASS, MSX, IRAS, POSS, and anyplace else you want. (See prior task too.) Line them up with the Spitzer images of comparable wavelengths (e.g., 8 um with 12 um, 25 um with 24 um). How much more detail do you see with Spitzer that was missed by IRAS or the other missions? Do you see more texture in the nebulosity? More point sources? How do the resolution and sensitivity vary?

- Which features are found across multiple wavelengths? Why?

Previously identified sources

9/15/11: mostly done for both BRC 27 and BRC 34. we are on the home stretch as of 15 sep

You've already started to do this as part of our proposal and spring work.

Big picture goal: Understand what has already been studied and what hasn't in the image.

More specific shorter term goals: Determine if the previously-known objects are saturated or not. Get some numbers so that you are ready to do photometry on them (in the next step).

Relevant links: How can I find out what scientists already know about a particular astronomy topic or object? and I'm ready to go on to the "Advanced" Literature Searching section and BRC Spring work (bottom of that page), specifically File:Luisa-mergedbrc27.txt. Luisa's region file of these objects (for use with ds9; NOTE THAT windoze computers will misinterpret the .reg file extension, so I've changed it to .reg.txt!): File:Luisa-mergedbrc27.reg.txt
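If you want to make your own region file for ds9 rather than editing one by hand, the format is plain text: a coordinate-system line followed by one shape per line. A minimal Python sketch, assuming your coordinates are already decimal degrees in J2000 (fk5); the object names and positions in the usage lines are hypothetical, not real BRC 27 sources, and the 3-arcsecond circle radius is an arbitrary choice.

```python
# Sketch: build a ds9 region file that circles and labels a list of objects.
# Example names/positions are HYPOTHETICAL, not real catalog entries.

def ds9_region_lines(objects, radius_arcsec=3.0):
    """Lines of a ds9 region file.

    objects: iterable of (name, ra_deg, dec_deg) in decimal degrees, J2000.
    Each object becomes a circle labeled with its name.
    """
    lines = ["# Region file format: DS9 version 4.1", "fk5"]
    for name, ra_deg, dec_deg in objects:
        # The trailing " after the radius tells ds9 the radius is in arcseconds.
        lines.append(f'circle({ra_deg:.6f},{dec_deg:.6f},{radius_arcsec}") # text={{{name}}}')
    return lines


def write_ds9_regions(path, objects, radius_arcsec=3.0):
    """Write the region file to disk, one region per line."""
    with open(path, "w") as fh:
        fh.write("\n".join(ds9_region_lines(objects, radius_arcsec)) + "\n")


if __name__ == "__main__":
    demo = [("star_A", 105.123456, -26.543210), ("star_B", 105.130000, -26.550000)]
    print("\n".join(ds9_region_lines(demo)))
```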

BRC 27 known objects with X and Y position coordinates ... File:XyLuisa-mergedbrc27.xls --CJohnson 22:54, 6 July 2011 (PDT)

NEW (4/2011) resource: YouTube video on how to take antiquated coordinates from one of our literature papers and use 2MASS to get updated, correct coordinates for each object.

Questions for you:

- For each of the known objects, you have the RA/Dec - find the objects in the image. What are the pixel coordinates in the image? Does it change among the IRAC bands? In the MIPS band?

- For each of the known objects, you have the RA/Dec - find the objects in the catalog. Which Spitzer catalog objects are the matches?
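Matching a literature position to the Spitzer catalog is a question of angular separation: which catalog entries lie within some small radius of the literature RA/Dec? A minimal Python sketch, assuming both lists are in decimal degrees (J2000). The catalog entries and the 2-arcsecond radius are hypothetical; choose the radius based on the astrometric accuracy of the older paper and the resolution of the band. It returns every entry inside the radius rather than only the closest, so cases with zero or several candidates (exactly the ones to check by eye) stand out.

```python
import math


def angular_sep_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation in arcseconds (haversine formula); inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(min(1.0, h)))) * 3600.0


def find_matches(ra, dec, catalog, radius_arcsec):
    """(separation_arcsec, catalog_id) for every entry within radius_arcsec, closest first.

    catalog: iterable of (catalog_id, ra_deg, dec_deg).
    """
    hits = []
    for cat_id, cra, cdec in catalog:
        sep = angular_sep_arcsec(ra, dec, cra, cdec)
        if sep <= radius_arcsec:
            hits.append((sep, cat_id))
    return sorted(hits)


if __name__ == "__main__":
    # Hypothetical catalog: one source 1" north of the target, one 10" north.
    cat = [("spitzer_001", 105.0, -26.5 + 1 / 3600), ("spitzer_002", 105.0, -26.5 + 10 / 3600)]
    print(find_matches(105.0, -26.5, cat, radius_arcsec=2.0))
```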

Luisa's BRC task notes (i.e., some notes on the answers I am expecting you to get! Don't peek until you've tried; you might find different information than I did!)

July: BIG PENDING ISSUE FOR HOMEWORK(?): are the duplicates you found REALLY duplicates on the sky? The computer said some were duplicates, and some ended up at the same position (apparently) but with different data. What is it really, on the sky? How are you going to tell if there are really sources there? (Hint: go get 2MASS images of these regions and make REALLY sure whether there is only one source there, or really two.)

UPDATE SEP 2011 Identification of Previously Known Objects on Candidate List tracks a lot of conversation about which objects are which.

Doing photometry

9/15/11: basically done for both BRC 27 and BRC 34. we will revisit this step for specific sources

OK, this step is going to take the longest, be the most complex, and involve the most steps and the most math.

Never just trust that the computer has done it right. It probably did exactly what you asked it to do, but you may have asked it to do the wrong thing. Always make some plots to test whether the photometry seems correct.

Big picture goal: Understand what photometry is, and what the steps are to accomplish it. Understand the units of Spitzer images. Understand how to assess if your photometry makes sense.

More specific shorter term goals: Do photometry on a set of mosaics for the same (small) set of sources. Assess whether your photometry seems right. We should decide as a group which set of sources to measure, and have everyone measure the same sources. We will then compare all of our measurements among the whole group.

Relevant links: Units and Photometry and I'm ready to go on to a more advanced discussion of photometry and Aperture photometry using APT, specifically this, which is the closest thing to a cookbook I will give you.

NEW (5/2011) resource: YouTube video on using APT, including calculating the number APT needs. (15 min because it starts from software installation and goes through doing photometry.)
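One piece of the units bookkeeping: Spitzer mosaics are in surface-brightness units of MJy/sr, so a sum of pixel values inside an aperture has to be multiplied by the solid angle of one pixel to become a flux density. A minimal Python sketch of just that conversion, assuming you already have a sky-subtracted aperture sum and know the mosaic pixel scale (read it from the FITS header; the 0.6 arcsec/pixel in the usage line is only an illustration). It deliberately leaves out the aperture corrections described in the instrument handbooks, which you still need to apply.

```python
ARCSEC_PER_RADIAN = 206265.0   # arcseconds in one radian


def pixel_solid_angle_sr(pixel_scale_arcsec):
    """Solid angle of one square pixel, in steradians."""
    return (pixel_scale_arcsec / ARCSEC_PER_RADIAN) ** 2


def aperture_sum_to_mjy(sum_mjy_per_sr, pixel_scale_arcsec):
    """Convert a sum of pixel values (MJy/sr) to a flux density in mJy.

    (MJy/sr) * sr = MJy, and 1 MJy = 1e9 mJy.
    """
    return sum_mjy_per_sr * pixel_solid_angle_sr(pixel_scale_arcsec) * 1e9


if __name__ == "__main__":
    # Illustrative numbers only: a sky-subtracted aperture sum of 500 MJy/sr
    # measured on a mosaic with 0.6"/pixel.
    print(aperture_sum_to_mjy(500.0, 0.6))
```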

NEW 7/7/11 -- region files for just i1, just i2, just i3, just i4, and 'final best catalog of everything with a valid detection somewhere':

- File:Justirac1sources.reg.txt

- File:Justirac2sources.reg.txt

- File:Justirac3sources.reg.txt

- File:Justirac4sources.reg.txt

- File:Allbandmergedsources.reg.txt

AND, File:Fred.xls, the file in which we were collecting everyone's measurements.

UPDATE SEP 2011 Identification of Previously Known Objects on Candidate List tracks a lot of conversation about which objects are which, which then feeds into Matching to Spitzer and Weeding the SEDs which talks about photometry for a smaller set of objects.

Questions for you:

- Use APT to explore the various parameters. What is a curve of growth?

- What are the best parameters to use? (RTFM to find what the instrument teams recommend.) What are the implications of those choices? What happens if you use other choices?
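To see what a curve of growth is without any of APT's machinery, you can build a fake star and measure it in bigger and bigger apertures. A minimal Python sketch, assuming a synthetic image (a Gaussian star of hypothetical brightness and width on a flat sky) and the simplest possible method: sum the pixels whose centers fall inside the aperture and subtract the median sky from an annulus. APT does this more carefully (partial pixels, several sky algorithms), so treat this only as an illustration of the idea.

```python
import math
import statistics


def synthetic_star(size=41, amp=100.0, sigma=2.0, sky=5.0):
    """Square image (list of rows) with a Gaussian star centered on a flat sky."""
    c = size // 2
    return [[sky + amp * math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]


def aperture_photometry(image, xc, yc, r_ap, r_in, r_out):
    """Sky-subtracted sum of pixels with centers within r_ap of (xc, yc).

    The sky level is the median of pixels in the annulus r_in..r_out,
    which should lie entirely outside the aperture.
    """
    src_sum, n_src, sky_pix = 0.0, 0, []
    for y, row in enumerate(image):
        for x, val in enumerate(row):
            r = math.hypot(x - xc, y - yc)
            if r <= r_ap:
                src_sum += val
                n_src += 1
            elif r_in <= r <= r_out:
                sky_pix.append(val)
    return src_sum - statistics.median(sky_pix) * n_src


def curve_of_growth(image, xc, yc, radii, r_in, r_out):
    """Net flux measured in each aperture radius, with a fixed sky annulus."""
    return [aperture_photometry(image, xc, yc, r, r_in, r_out) for r in radii]


if __name__ == "__main__":
    img = synthetic_star()
    radii = [1, 2, 3, 4, 6, 8, 10]
    for r, flux in zip(radii, curve_of_growth(img, 20, 20, radii, 15, 20)):
        print(r, round(flux, 1))
```

The net flux should climb steeply at small radii and flatten once the aperture encloses essentially all of the star's light. Where it flattens depends on the PSF width, which is one reason the instrument teams recommend specific aperture and annulus sizes.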

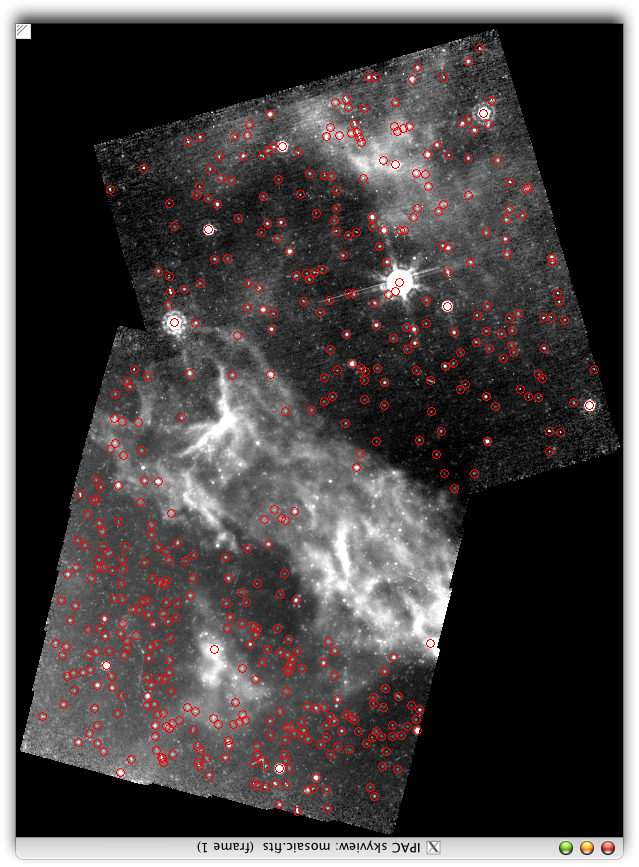

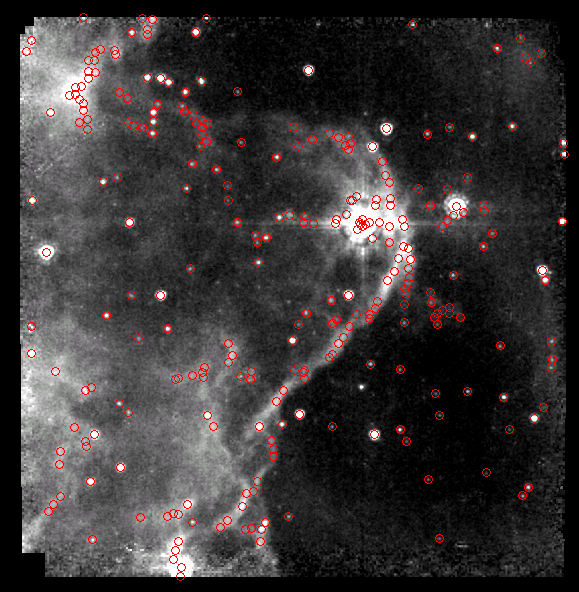

- Compare the MOPEX source identifications I did from just one band with their corresponding image. Is MOPEX getting fooled by detector artifacts? You have the tbl files (as opposed to region files) from me for this. You can use SHA to load tbl files over images, or another standalone software package called Skyview. Let me know if you want the reg files and I'll make you some.

- Compare the MOPEX source identifications from, say, IRAC band 3 with the image from IRAC band 1, or the source extractions from MIPS-24 with the image from IRAC band 1. Are there a lot of stars (or other objects) in common? How does the nebulosity affect it? Again, use the tbl files from me (in SHA or Skyview), or ask me for reg files.

- Why did either of these things happen when I ran automatic source detection in MOPEX? (see below)

Bandmerging the photometry

9/15/11: done for both BRC 27 and BRC 34, though we may need to revisit for certain objects, particularly those from earlier observations that should be tied to more than one object.

I use my own code to do this; there is no pre-existing package for it. If you do it by hand (or semi-by-hand) using APT, you can merge the photometry manually. My merged photometry includes J through M24.

Big picture goal: Understand what this process is.

More specific shorter term goals: Do this by hand.

Relevant links: Resolution

Questions for you:

- Make sure that I've merged the right sources across several bands by spot-checking a few of them. (Find an object that the catalog says is detected in at least 3 bands, and then overlay the images in a 3-color image or Spot to see whether there is really a source there, at exactly that spot, in all bands, or whether it's a cluster of objects, or different objects getting bright at different bands.)

- Have I 'lost' the instrumental artifacts you noticed in the previous section? Or are there instrumental artifacts or otherwise false sources still in the list?

- Does resolution matter? (Can you find a place where more than one IRAC source can be matched to the same MIPS source?)

- Can you start merging in information from other bands (see tasks above)? Be very careful about resolution!!
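The resolution question can be made concrete with a simple nearest-neighbor merge. A minimal Python sketch, assuming both source lists are (id, RA, Dec) in decimal degrees. This is a generic positional match, not the code used to build our merged catalog, and the matching radius is a hypothetical parameter you would tune to the resolution of the coarser band. It reports any MIPS source claimed by more than one IRAC source, which is exactly the case the resolution question asks about.

```python
import math


def sep_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation in arcseconds (haversine formula); inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(min(1.0, h)))) * 3600.0


def bandmerge(irac, mips, radius_arcsec):
    """Match each IRAC source to its nearest MIPS source within radius_arcsec.

    Returns (merged, shared):
      merged: IRAC id -> MIPS id, or None if nothing is close enough
      shared: MIPS id -> list of IRAC ids, only where more than one IRAC source claims it
    """
    merged, claims = {}, {}
    for iid, ra, dec in irac:
        best = None
        for mid, mra, mdec in mips:
            d = sep_arcsec(ra, dec, mra, mdec)
            if d <= radius_arcsec and (best is None or d < best[0]):
                best = (d, mid)
        merged[iid] = best[1] if best else None
        if best:
            claims.setdefault(best[1], []).append(iid)
    shared = {mid: ids for mid, ids in claims.items() if len(ids) > 1}
    return merged, shared


if __name__ == "__main__":
    # Hypothetical: two IRAC sources 2" apart, both near one MIPS source.
    irac = [("i1", 10.0, 0.0), ("i2", 10.0, 2 / 3600), ("i3", 10.0, 60 / 3600)]
    mips = [("m1", 10.0, 1 / 3600)]
    print(bandmerge(irac, mips, radius_arcsec=3.0))
```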

UPDATE SEP 2011 Identification of Previously Known Objects on Candidate List tracks a lot of conversation about which objects are which, which then feeds into Matching to Spitzer and Weeding the SEDs which talks about photometry for a smaller set of objects.

Working with the data tables

9/15/11: somewhat done for at least BRC 27. Will need to redo as repercussions of recent changes above propagate forward.

OK, fair warning, math involved here too. And programming spreadsheets!

Big picture goal: Understand how to work with the tables. Understand how to convert magnitudes back and forth to flux densities.

More specific shorter term goals: Import the table into Excel. Program a spreadsheet to convert between mags and flux densities.

Relevant links: Units and Skyview, though a lot of the important discussion is actually on the L1688 page itself, sorry. See also Central wavelengths and zero points.

NEW (5/2011) resource for understanding how to do this: YouTube video on what tbl files are, how to access them, and specifically how to import tbl files into xls. (10min)

Make sure you understand how I got the magnitudes from the fluxes (or the fluxes from the magnitudes). You will need magnitudes for the next step, and fluxes for the SED steps after that.
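The conversion itself is one line each way: F = F0 × 10^(-0.4 m) and m = -2.5 log10(F/F0), where F0 is the zero-magnitude flux density of the band. A minimal Python sketch; the zero points below are values as commonly quoted for 2MASS, IRAC, and MIPS-24, so check them against the Central wavelengths and zero points page before relying on them. The band names used as dictionary keys are this sketch's own labels.

```python
import math

# Zero-magnitude flux densities in Jy, as commonly quoted.
# Check against the "Central wavelengths and zero points" page before use.
ZERO_POINTS_JY = {
    "J": 1594.0, "H": 1024.0, "Ks": 666.7,
    "IRAC1": 280.9, "IRAC2": 179.7, "IRAC3": 115.0, "IRAC4": 64.9,
    "MIPS24": 7.17,
}


def mag_to_jy(mag, band):
    """Flux density in Jy from a magnitude: F = F0 * 10**(-0.4 * m)."""
    return ZERO_POINTS_JY[band] * 10 ** (-0.4 * mag)


def jy_to_mag(flux_jy, band):
    """Magnitude from a flux density in Jy: m = -2.5 * log10(F / F0)."""
    return -2.5 * math.log10(flux_jy / ZERO_POINTS_JY[band])


if __name__ == "__main__":
    f = mag_to_jy(10.0, "IRAC1")     # a hypothetical 10th-magnitude source at 3.6 microns
    print(f * 1000, "mJy")           # Jy -> mJy
    print(jy_to_mag(f, "IRAC1"))     # round trip back to (about) 10.0
```

In a spreadsheet the same conversion is a single formula, e.g. =280.9*10^(-0.4*A2) for an IRAC-1 magnitude in cell A2.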

Questions for you:

- How many stars are detected in each band? Is this about what you expected based on your answer to the questions in the mosaic section above? HINT: you can do this using Excel, you don't need to count these manually!! Ask if you need a further hint on exactly how to do this.

- Which stars in the catalog are the stars identified in the literature?
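Counting detections per band is the same logic whether you do it in Excel or in code: count the rows whose value is not the -9 "no data" flag. A minimal Python sketch, assuming a simple IPAC-style .tbl (backslash lines for keywords and comments, "|" lines for the column names and types, then whitespace-separated data with no blank values). The sample table and its column names are hypothetical. Real .tbl files are fixed-width; astropy's `Table.read(path, format="ascii.ipac")` handles the full format.

```python
NO_DATA = -9.0   # the "no data" flag used in our tables


def read_ipac_table(text):
    """Minimal reader for a simple IPAC-style table given as a string.

    Assumes every data value is a single whitespace-free token and none are blank.
    """
    names, rows = None, []
    for line in text.splitlines():
        if not line.strip() or line.startswith("\\"):
            continue                          # blank lines and \keyword / \comment lines
        if line.startswith("|"):
            if names is None:                 # first '|' line holds the column names
                names = [n.strip() for n in line.strip().strip("|").split("|")]
            continue                          # later '|' lines hold types, units, nulls
        rows.append(dict(zip(names, line.split())))
    return rows


def count_detections(rows, columns):
    """Number of rows with a real value (not the -9 flag) in each column."""
    return {col: sum(1 for r in rows if float(r[col]) != NO_DATA) for col in columns}


SAMPLE = r"""\ hypothetical three-source example, not real data
|  id | m36    | m45    |
| int | double | double |
   1    12.30    12.10
   2    -9.00    13.40
   3    14.10    -9.00
"""

if __name__ == "__main__":
    print(count_detections(read_ipac_table(SAMPLE), ["m36", "m45"]))
```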

UPDATE SEP 2011 Identification of Previously Known Objects on Candidate List tracks a lot of conversation about which objects are which, which then feeds into Matching to Spitzer and Weeding the SEDs which talks about photometry for a smaller set of objects.

Making color-color and color-magnitude plots

9/15/11: somewhat done for at least BRC 27. Will need to redo as repercussions of recent changes above propagate forward.

Big picture goal: Understand what plots to make. Understand the basic idea of using them to pick out certain objects.

More specific shorter term goals: Make some plots. Understand the basic approach of Gutermuth et al. (see Gutermuth et al. 2009, Appendix A).

Relevant links: Color-Magnitude and Color-Color plots and Finding cluster members and Color-color plot ideas and Gutermuth color selection

Questions for you:

- Pick a diagnostic color-color or color-magnitude plot to make. Does my photometry seem ok?

- Pick at least one color-color or color-magnitude plot to make. Figure out a way to ignore the -9 (no data) flags. Where are the plain stars? Where are the IR excess objects?

- Where are the famous objects in the plot? Where are the new YSO candidates I used the Gutermuth method to find?

- Make a new column in your Excel spreadsheet with some colors. Is there a way you can get Excel to tell you automatically which objects have an IR excess? Can you implement the Gutermuth selection? (You may not be able to do so.)

- Make the plots that go into the Gutermuth selection, including the relevant lines on the plot.

- Of the objects I have that fit the Gutermuth criteria, are any of them false or otherwise bad sources? How can you tell?

- Bonus but very important question: How do you know that some of these sources aren't galaxies? Can you find something that is obviously a galaxy on the images? Can you think of a way using public data that already exist to check on the "galaxy-ness" of some of these objects?
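Ignoring the -9 flags and building colors works the same way in a spreadsheet or in code. A minimal Python sketch, assuming each source is a dictionary with hypothetical keys id, m36, m45, m58, and m80 (magnitudes at 3.6, 4.5, 5.8, and 8.0 microns). It builds the [5.8]-[8.0] vs. [3.6]-[4.5] points for one commonly used IRAC color-color plot, dropping any source with a -9 in a band it needs. The `flag_red` threshold is an arbitrary user choice for illustration only; it is NOT the Gutermuth et al. (2009) selection, which is considerably more involved.

```python
NO_DATA = -9.0   # the "no data" flag


def color(m1, m2):
    """m1 - m2, or None if either magnitude is the no-data flag."""
    if m1 == NO_DATA or m2 == NO_DATA:
        return None
    return m1 - m2


def color_color_points(sources):
    """(id, [5.8]-[8.0], [3.6]-[4.5]) for every source detected in all four IRAC bands."""
    points = []
    for s in sources:
        x = color(s["m58"], s["m80"])
        y = color(s["m36"], s["m45"])
        if x is not None and y is not None:
            points.append((s["id"], x, y))
    return points


def flag_red(points, x_threshold):
    """Illustrative only: ids whose [5.8]-[8.0] color exceeds a chosen threshold.

    This is NOT the Gutermuth et al. (2009) selection.
    """
    return [sid for sid, x, _ in points if x > x_threshold]


if __name__ == "__main__":
    demo = [
        {"id": "a", "m36": 10.0, "m45": 10.0, "m58": 10.0, "m80": 10.0},
        {"id": "b", "m36": 11.0, "m45": 10.5, "m58": 10.0, "m80": 9.0},
        {"id": "c", "m36": -9.0, "m45": 10.0, "m58": 10.0, "m80": 9.0},
    ]
    pts = color_color_points(demo)
    print(pts)
    print(flag_red(pts, 0.5))
```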

NEW 7/8/11: File:Fridayafternoon.pdf -- pdf of ppt from friday afternoon 7/8/11. Includes Venn diagram of what we've been doing the last few days.

Investigating the images of the objects

9/15/11: somewhat done for BRC 27. we will revisit for specific sources as the recent updates above propagate forward.

Big picture goal: Understand why we need to look at the images of each of our short list of candidates.

More specific shorter term goals: Figure out how to get thumbnails and/or find these things in our images. Calibrate your eyeball for the various images/resolutions/telescopes to figure out what is extended and what isn't. Drop the bad objects off our candidate YSO list.

Relevant links: How can I get data from other wavelengths to compare with infrared data from Spitzer? and Resolution (specifically some of the concrete examples there) and IRSA finder chart

NEW (5/2011) resource for understanding how to use Finder Chart to examine the images of various candidates in bands other than Spitzer: YouTube video on using Finder Chart. To examine these images alongside the original Spitzer images, load them (and the Spitzer images) into ds9, pick one of the small finder chart images, and then pick 'Frame/Match/Frame/WCS'. All frames will snap into alignment with North up, on the same scale, with the object in the center.

Questions for you:

- Which objects are still point sources at all available bands?

- Which are instrumental artifacts? Or MOPEX hiccups?

- Which might have corrupted photometry?

- Which are correctly matched to literature values (or correctly identified as duplicates)? You'll need to go back to the literature above to check this.

UPDATE SEP 2011 see Matching to Spitzer and Weeding the SEDs which talks (will talk) about examining images for a smaller set of objects.

Making SEDs

9/15/11: somewhat done for at least BRC 27. Will need to redo as repercussions of recent changes above propagate forward.

WARNING: lots of math and programming spreadsheets here too. You WILL do this more than once to get the units right!

Big picture goal: Understand what an SED is and why it matters.

More specific shorter term goals: Make at least one SED yourself. Examine the SEDs for all of our candidate objects. Use them to reassess our photometry if necessary, and to drop the bad objects off the YSO candidate list.

Relevant links: Units and SED plots and Studying Young Stars and, for that matter, the detailed object-by-object discussion in the appendix of the CG4 paper. See also Central wavelengths and zero points.

Pick some objects to plot. Some of the previously identified ones from above would be a good place to start, or the ones you flagged above as having an IR excess. Start with just one. It will take time to get the units right, but once you do it right the first time, all the rest come along for free (if you're working in a spreadsheet). Spend some time looking at the SEDs. Look at their similarities and differences. Identify the bad ones, and discuss with the others why/whether to drop them off the list of YSO candidates. See also the material above about data at other wavelengths, and include literature/archival data from other sources where appropriate and possible.
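The units step that trips everyone up is getting from flux density F_nu (in Jy) to the quantity usually plotted in an SED, lambda*F_lambda (which equals nu*F_nu). A minimal Python sketch, assuming you have already converted magnitudes to Jy. The central wavelengths are values as commonly quoted for 2MASS, IRAC, and MIPS-24 and should be checked against the Central wavelengths and zero points page; the band labels and the example fluxes are this sketch's own, and undetected bands (None) are simply skipped rather than plotted as limits.

```python
import math

C_MICRON_PER_S = 2.99792458e14   # speed of light in microns per second
JY_TO_CGS = 1e-23                # 1 Jy = 1e-23 erg s^-1 cm^-2 Hz^-1

# Central wavelengths in microns (check against the zero points page).
WAVELENGTH_UM = {
    "J": 1.235, "H": 1.662, "Ks": 2.159,
    "IRAC1": 3.550, "IRAC2": 4.493, "IRAC3": 5.731, "IRAC4": 7.872,
    "MIPS24": 23.68,
}


def lambda_f_lambda(flux_jy, wavelength_um):
    """lambda*F_lambda (= nu*F_nu) in erg s^-1 cm^-2, from F_nu in Jy."""
    nu_hz = C_MICRON_PER_S / wavelength_um
    return flux_jy * JY_TO_CGS * nu_hz


def sed_points(fluxes_jy):
    """(log10 lambda, log10 lambda*F_lambda) pairs, sorted by wavelength.

    fluxes_jy: dict of band name -> flux density in Jy, or None if undetected.
    """
    points = []
    for band, flux in fluxes_jy.items():
        if flux is None or flux <= 0:
            continue   # no detection: nothing to plot (upper limits need separate handling)
        lam = WAVELENGTH_UM[band]
        points.append((math.log10(lam), math.log10(lambda_f_lambda(flux, lam))))
    return sorted(points)


if __name__ == "__main__":
    # Hypothetical source, fluxes in Jy.
    demo = {"Ks": 0.05, "IRAC1": 0.04, "IRAC2": 0.035, "IRAC4": 0.03, "MIPS24": None}
    for x, y in sed_points(demo):
        print(f"{x:.3f}  {y:.3f}")
```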

Questions for you:

- What do the IR excesses look like in your plots? Do they look like you expected? Like objects in CG4 or elsewhere?

- Make some SEDs of things you know are not young stars. What do they look like?

- Which objects look like they have 1 or 2 bad photometry points? Go back and check the photometry for them.

- Which objects look like clear YSO SEDs? Which objects do not?

- Any photometry look bad? Go back and check it!

- Any objects within the maps but undetected? Go back and get limits and add those too!

--Legassie 15:20, 8 July 2011 (PDT) TIPS ON CREATING SED PLOTS USING EXCEL: File:SED PLOT EXAMPLE.XLSX

UPDATE SEP 2011 see Matching to Spitzer and Weeding the SEDs which talks (will talk) about examining a smaller set of objects in great detail.

Literature again

9/15/11: not really done yet.

This step is important for this particular project, because of the nature of the existing literature for the objects we are studying.

Big picture goal: Understand at least the basics of how what we did is different from what Chauhan et al. did with the IRAC data.

More specific shorter term goals: Knowing what you do now, go back and reread Chauhan et al. Do a detailed comparison of our method for finding young stars with that of Chauhan et al.

Relevant links: How can I find out what scientists already know about a particular astronomy topic or object? and I'm ready to go on to the "Advanced" Literature Searching section and BRC Spring work.

Questions for you:

- What are the steps (cookbook-style) that Chauhan et al. used to find YSOs?

- What were our steps?

- How are they different?

- Does our IRAC photometry agree within errors? (That "within errors" is very important...)

- Did we find the same specific sources as they did? Did we find more, fewer, or exactly the same number? Did we recover all of theirs? Why or why not?

- Which method do you think works better?

- NON-CHAUHAN: Did we recover all of the young stars identified by Ogura or Gregorio-Hetem or any of the other papers? Why or why not?

- NON-CHAUHAN: Are any of our surviving YSO candidates listed in SIMBAD for any reason? Are they still likely YSOs, or have they shown up as galaxies there?

Analyzing SEDs

9/15/11: not done yet, and may be skippable.

This is advanced, and we may not get here.

Add a new column in Excel to calculate the slope between 2 and 8 microns in the log (lambda*F(lambda)) vs log (lambda) parameter space. This task only makes sense for those objects with both K band and IRAC-4 detections. (For very advanced folks: fit the slope to all available points between K and IRAC-4 or MIPS-24. How does this change the classifications?)

- if the slope > 0.3 then the class = I

- if the slope < 0.3 and the slope > -0.3 then the class = 'flat'

- if the slope < -0.3 and the slope > -1.6 then class = II

- if the slope < -1.6 then class = III

These classifications come from Wilking et al. (2001, ApJ, 551, 357); yes, they are the real definitions (read more about the classes here)!
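The two-point slope and the class boundaries above can be sketched in a few lines. A minimal Python sketch, assuming flux densities F_nu at Ks (taken here as 2.159 microns) and IRAC-4 (taken here as 7.872 microns); check those central wavelengths against the Central wavelengths and zero points page. Because lambda*F_lambda = nu*F_nu is proportional to F_nu/lambda, the unit constants cancel in the slope. The list above leaves values exactly on a boundary (0.3, -0.3, -1.6) unassigned; this sketch arbitrarily puts them in the next class down. Only the simple two-point slope is shown, not the "very advanced" fit to all points, and the example fluxes are hypothetical.

```python
import math
from collections import Counter


def sed_slope(lam1_um, fnu1, lam2_um, fnu2):
    """Slope of log(lambda*F_lambda) vs. log(lambda) between two points.

    Inputs are F_nu in any consistent unit (e.g. Jy); lambda*F_lambda is
    proportional to F_nu / lambda, so the constants cancel.
    """
    y1 = math.log10(fnu1 / lam1_um)
    y2 = math.log10(fnu2 / lam2_um)
    return (y2 - y1) / (math.log10(lam2_um) - math.log10(lam1_um))


def sed_class(alpha):
    """Class from the slope thresholds listed above (boundary values go to the next class down)."""
    if alpha > 0.3:
        return "I"
    if alpha > -0.3:
        return "flat"
    if alpha > -1.6:
        return "II"
    return "III"


if __name__ == "__main__":
    # Hypothetical (Ks, IRAC4) flux densities in Jy for three sources.
    sources = {"src1": (0.01, 0.20), "src2": (0.05, 0.03), "src3": (0.10, 0.008)}
    classes = {name: sed_class(sed_slope(2.159, fk, 7.872, f8))
               for name, (fk, f8) in sources.items()}
    print(classes)
    print(Counter(classes.values()))   # how many of each class
```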

- How many class I, flat, II and III objects do we have?

- Where are the objects with infrared excesses located on the images? Are all the Class Is in similar sorts of locations, but different from the Class IIIs?

For very advanced folks: suite of online models from D'Alessio et al. and suite of online models from Robitaille et al. Compare these to the SEDs we have observed.

Writing it up!

9/15/11: not done yet.

We need to write an AAS abstract and then the poster, and if we're lucky, a paper!

We need to include:

- How the data were taken.

- How the data were reduced.

- What the Spitzer properties are of the famous objects, including how the Spitzer observations confirm/refute/resolve/fit in context with other observations from the literature.

- What the Spitzer properties are of other sources here, including objects you think are new YSOs (or objects you think are not), and why you think that.

- How this region compares to other regions observed with Spitzer.

Take inspiration for other things to include from other Spitzer papers on star-forming regions in the literature.

Education Poster Abstract. version 1.0 As part of the NASA/IPAC Teacher Archive Research Project program (NITARP), four high school teachers have participated with two to four students in a science research project using archival Spitzer data to search for young stellar objects in two bright-rimmed clouds: BRC 27 and BRC 34. Our research findings are presented in another poster, Rebull et al. These teachers are from Breck School, Carmel Catholic High School, Glencoe High School, and Pine Ridge High School. A key initiative in science education is integrating authentic scientific research into the curriculum. Since the NITARP program can only fund a limited number of teachers and students, our group has investigated the role of team leaders (both teachers and students) in educating and inspiring other teachers and students. This project allows our students to assume an active role in the process of project development, teamwork, data collection and analysis, interpretation of results, and formal scientific presentations. This poster presents our research on how the students who are chosen as the team leaders disseminate the information to other students within the school as well as to other schools and interest groups. Since three of the four teachers are women, we have also looked at how these teachers inspire young women to participate in this program and to pursue STEM (Science, Technology, Engineering, and Math) careers. This program was made possible through the NASA/IPAC Teacher Archive Research Project program (NITARP) and was funded by NASA Astrophysics Data Program and Archive Outreach funds. --Linahan

version 1.1 As part of the NASA/IPAC Teacher Archive Research Project program (NITARP), four high school teachers have participated with selected students in a research project using archival Spitzer data to search for young stellar objects in two bright-rimmed clouds: BRC 27 and BRC 34. Our research findings are presented in another poster, Johnson et al. A key initiative in science education is integrating authentic scientific research into the curriculum. Since the NITARP program funds a limited number of teachers and students, our group has investigated the role of team leaders (both teachers and students) in educating and inspiring other teachers and students. This project allows our students to assume an active role in the process of project development, teamwork, data collection and analysis, interpretation of results, and formal scientific presentations. This poster presents our research on how the student team leaders disseminate the information to other students within the school, as well as to other schools and interest groups. Since three of the four teachers are female, we have also looked at how these teachers inspire young women to participate in this program and to pursue STEM (Science, Technology, Engineering, and Math) careers. This program was made possible through the NASA/IPAC Teacher Archive Research Project program (NITARP) and was funded by NASA Astrophysics Data Program and Archive Outreach funds.

If it would be easier, we can work with a Word document. Please let me know your preference. --CJohnson 10:53, 21 September 2011 (PDT)

Science Poster Abstract.

version 1.0

Found near the edges of HII regions, bright-rimmed clouds (BRCs) are thought to be home to triggered star formation. Using Spitzer Space Telescope archival data, we investigated BRC 27 and BRC 34 to search for previously known and new young stellar objects (YSOs). BRC 27 is located in the molecular cloud Canis Majoris R1, a known site of star formation. BRC 34 has a variety of features worthy of deeper examination: dark nebulae, molecular clouds, emission stars, and IR sources. Our team used archival Spitzer InfraRed Array Camera (IRAC) and Multiband Imaging Photometer for Spitzer (MIPS) data, combined with 2-Micron All-Sky Survey (2MASS) data as well as optical data from XXX. We used infrared excess to investigate the properties of previously known YSOs and to identify additional new candidate YSOs in these regions. This research was made possible through the NASA/IPAC Teacher Archive Research Project (NITARP) and was funded by NASA Astrophysics Data Program and Archive Outreach funds. --CJohnson 11:19, 21 September 2011 (PDT)

Author List. from Breck School (Minneapolis, MN): Chelen H. Johnson, Nina G. Killingstad, Taylor S. McCanna, Alayna M. O'Bryan, Stephanie D. Carlson, Melissa L. Clark, Sarah M. Koop, Tiffany A. Ravelomanantsoa

--CJohnson 11:24, 21 September 2011 (PDT)